How to Find the Right Video Capture System

You’ve selected your cameras and switchers and planned your staging. Now you need an ingest solution to capture your video production. This guide explains the points to consider when choosing a video capture solution.

SHORTCUTS

- Record edit-ready formats

- Edit while capture

- Segment Record

- Editing Software

- Codecs required by post-production

- Resolution for final file delivery

- Support for IP, NDI, and SRT

- Support for streaming formats

- Capture2Cloud – direct to cloud ingest

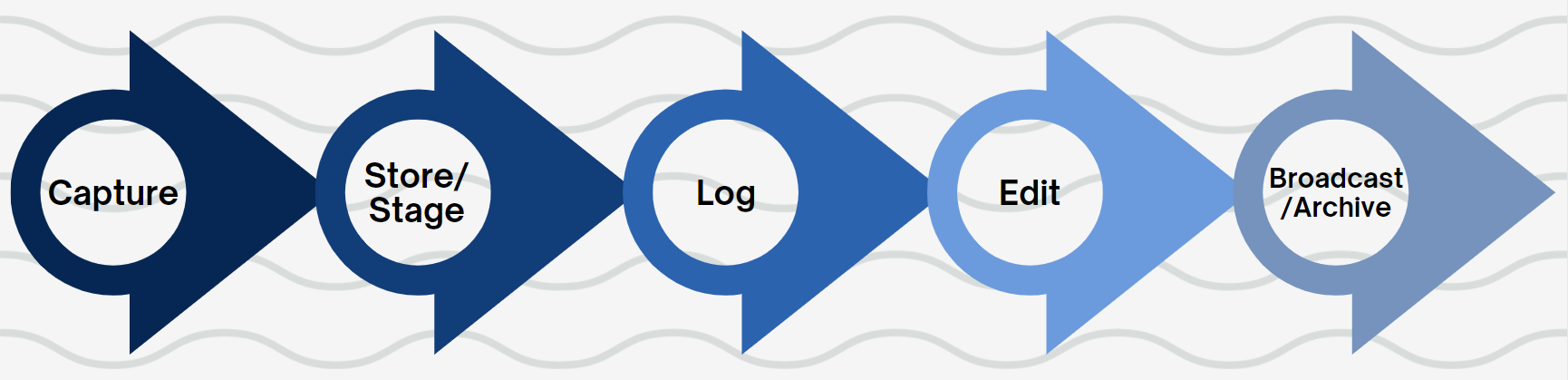

(I) PRODUCTION WORKFLOW GOALS

When choosing a video recorder, consider your workflow objectives. For instance, are you recording for near-live streaming or a quick-turn broadcast, such as a house of worship service? Are you recording a multicam studio project whose final output will take weeks of post-production? Or are you recording material that will not be edited at all and will go straight to archive?

Videomaker has published some excellent guides to planning multi-camera shoots.

Let’s define some workflow objectives:

i) NEAR LIVE BROADCAST OR STREAMING

In a near-live broadcast workflow, a director or producer uses a switcher to create a line cut from multiple cameras. The recorded line cut is then brought into editing software such as Avid Media Composer, or a graphics tool such as Adobe After Effects, where graphics and titles are added. The result is exported and used for streaming. Very little editing happens here, since the turnaround time is typically 30 minutes or less.

ii) RECORDING FOR EDITING

In a multi-camera studio recording, a show is recorded with multiple cameras on a studio set or stage. A typical setup records 3 isolated ("ISO") cameras plus 1 line cut, for a total of 4 recording channels. As in the previous workflow, the director or producer directs the line cut, which serves as a reference for the editing team.

iii) RECORDING FOR ARCHIVE

In scientific or surveillance environments, multiple feeds may need to be recorded for analysis and then archived. The end goal of this type of multi-camera recording is archival, so choosing the codec and wrapper carefully is important: support for any given format may not last.

(II) FEATURES TO CONSIDER IN AN INGEST SOLUTION

There are a few must-have features for any multi-camera ingest solution. Most importantly, your capture system should enable you to F-L-O-W from production to post to archive. Here are a few features you should consider.

i) EASE OF USE / EASE OF SETUP

Ideally, you want a plug-and-play device: you plug in a source and start recording right away, with minimal downtime and training.

ii) GANG CONTROL

Matching timecode across all record channels is a minimum requirement for multi-camera record sessions. You can achieve this by gang-controlling all channels, either with an external trigger or internally if the record system supports it. Depending on the scale of your production, anywhere from dozens to thousands of files may be delivered to post or reviewed for playback, and shared timecode across all channels provides the critical reference points for editing and playback.
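To make the role of shared timecode concrete, here is a minimal Python sketch (not any recorder's actual logic) that converts SMPTE timecode to frame counts and reports how many frames each channel started after the earliest one. It assumes an integer 30 fps rate and non-drop-frame timecode; 29.97 fps drop-frame timecode needs different arithmetic. The channel names are illustrative.

```python
def timecode_to_frames(tc: str, fps: int = 30) -> int:
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range at {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def start_offsets(start_timecodes: dict, fps: int = 30) -> dict:
    """Frames by which each channel started after the earliest-starting channel."""
    starts = {name: timecode_to_frames(tc, fps) for name, tc in start_timecodes.items()}
    earliest = min(starts.values())
    return {name: frame - earliest for name, frame in starts.items()}


# Gang-triggered channels all share one start timecode, so every offset is 0.
ganged = {"ISO1": "01:00:00:00", "ISO2": "01:00:00:00", "ISO3": "01:00:00:00", "LINE": "01:00:00:00"}
print(start_offsets(ganged))

# A channel started by hand 1 second and 15 frames late is 45 frames out of sync.
manual = {"ISO1": "01:00:00:00", "ISO2": "01:00:01:15"}
print(start_offsets(manual))  # {'ISO1': 0, 'ISO2': 45}
```

With gang control every offset is zero, which is what lets an editor line up the ISOs and the line cut without manual syncing.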

iii) FLEXIBLE FILE NAMING

File names serve as important metadata for the editorial process. For instance, if you are recording a daily children’s show, useful information in the file name might include the puppet’s name, the talent’s name, and the scene and take numbers. This information gives the editor useful reference points when cutting a sequence.

iv) FLOWING FROM PRODUCTION TO POST

You should also take steps to minimize delay and errors with your recorded material so that you can f-l-o-w from production to post. Consider the following items.

- Network recording

  - Recording directly to a network destination eliminates the downtime of transferring your media from one location to another, for instance from camera cards on set. If you’ve recorded 8+ hours across a typical multicam setup of 3 ISOs and 1 line cut, that’s 32 or more hours of media that must be copied to another location. The copy process alone can easily take several hours!

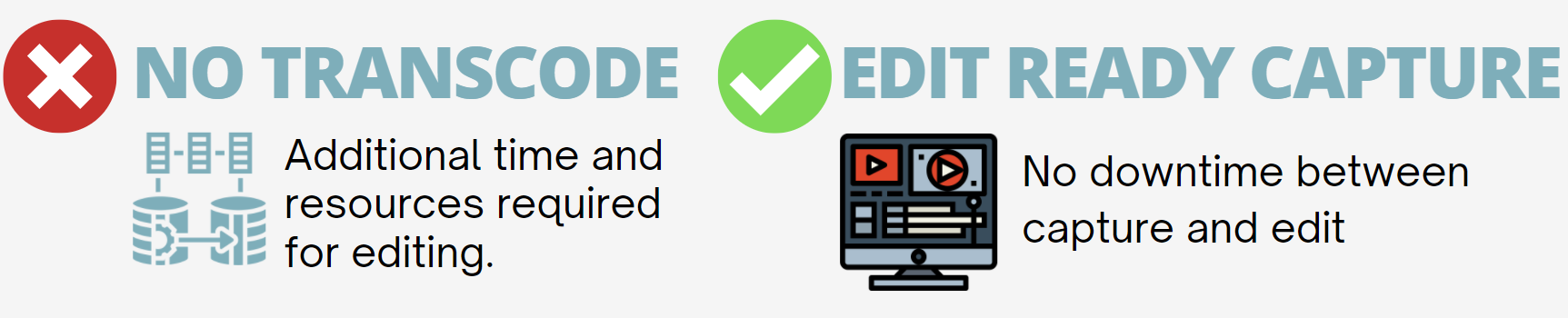

- Edit-ready formats

  - Transcoding used to be a standard part of the multicam workflow because on-camera and standalone recorders couldn’t create the file types that post-production needed for editing. That paradigm is outdated: many recorders can now create edit-ready material, so post-production no longer needs an additional transcoding step.

- Primary / proxy file creation

  - A 4K program may be your ultimate goal, but editing with 4K files can be a massive and unnecessary drain on system resources. Instead, edit with a lightweight proxy file, such as H.264. Then match back to your 4K files once your edit, color and VFX are in place.

- Record redundancy

  - Whatever the size of your production, you will want to safeguard your investment in it. A system that writes a concurrent backup copy of your material to a separate location is critical to the success of your production.
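To put rough numbers on the network-recording example, the following sketch estimates how much media a 3 ISO + 1 line cut session produces and how long copying it would take. The per-channel bitrate (about 220 Mb/s, an assumed 1080p edit-ready codec) and the sustained copy throughput (200 MB/s) are assumptions for illustration, not measured figures.

```python
def media_hours(record_hours: float, channels: int) -> float:
    """Hours of media produced when every channel records for the whole session."""
    return float(record_hours * channels)


def media_size_tb(hours: float, bitrate_mbps: float) -> float:
    """Storage in terabytes (10**12 bytes) at a constant bitrate in megabits per second."""
    return hours * 3600 * bitrate_mbps * 1e6 / 8 / 1e12


def copy_hours(size_tb: float, throughput_mb_s: float) -> float:
    """Hours needed to copy size_tb at a sustained throughput in megabytes per second."""
    return size_tb * 1e12 / (throughput_mb_s * 1e6) / 3600


hours = media_hours(8, 4)          # 3 ISOs + 1 line cut for 8 hours
size = media_size_tb(hours, 220)   # assumed ~220 Mb/s per channel
print(hours, round(size, 2), round(copy_hours(size, 200), 1))  # 32.0 3.17 4.4
```

Under these assumptions, a single 8-hour day is roughly 3.2 TB and more than four hours of copying, before any verification pass.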

v) RESTFUL API

- Interoperability is key to a smooth workflow. A RESTful API lets other products and custom scripts control the recorder and integrate it into the rest of your workflow.
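As an illustration of what API control can look like, the sketch below uses Python's standard `urllib.request` to build a JSON POST request that starts several channels at once. The address `recorder.local:8080`, the `/api/record` path and the payload fields are hypothetical; every recorder documents its own endpoints. The request is built but not sent.

```python
import json
import urllib.request

# Hypothetical recorder address: substitute the host, paths and payload
# documented by your own recorder's API.
RECORDER_URL = "http://recorder.local:8080"


def build_record_request(channels: list, action: str) -> urllib.request.Request:
    """Build (but do not send) a POST asking the recorder to start or stop channels."""
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    body = json.dumps({"channels": channels, "action": action}).encode("utf-8")
    return urllib.request.Request(
        f"{RECORDER_URL}/api/record",  # hypothetical endpoint
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_record_request(["ISO1", "ISO2", "ISO3", "LINE"], "start")
print(req.get_method(), req.full_url)  # POST http://recorder.local:8080/api/record
# To actually send it: urllib.request.urlopen(req, timeout=5)
```

Sending one request for all channels is also one way to achieve the gang control described earlier, if the recorder supports it.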

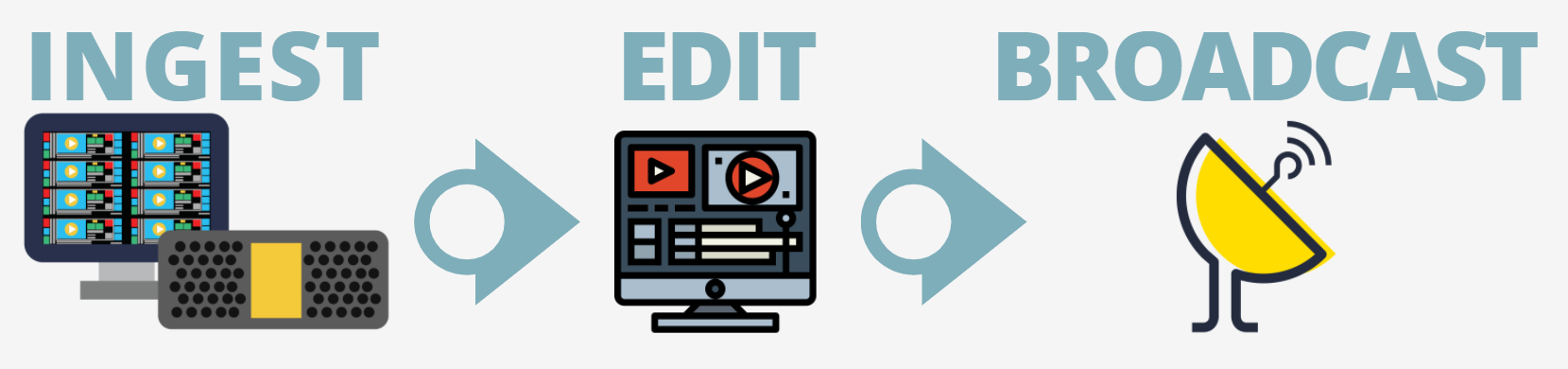

(III) PRODUCTION TURN TIME

Depending on the type of production, you may need to make your recorded material available as soon as possible. For instance, do you need to air your edited material near live, say within 30 minutes of the start of recording? Here are some strategies for productions that require a fast turnaround.

i) RECORD EDIT READY FORMATS

As discussed previously, capturing edit-ready material eliminates the time required for transcoding. Once the recording stops, your files are ready to be edited straightaway.

ii) EDIT WHILE CAPTURE

This record style lets you edit a file while it’s still growing. It’s typically available only for certain codecs and wrappers, and support varies by NLE. For instance, a popular edit-while-capture workflow records XDCAM in an MXF OP1a wrapper and edits it in Adobe Premiere Pro, which auto-refreshes the editing timeline so that you can see newly available material. One drawback of this workflow is file integrity: when more than one person is editing the sequence, the file itself can become corrupted. Coordinate with the post-production team to avoid issues like this.

iii) SEGMENT RECORD

Some scenarios, such as surveillance or reality TV, record for an unspecified amount of time. The result can be a file many hours long, which is both unwieldy and more vulnerable to corruption. To offset this risk, you can use a record style called segment record (chunking), which breaks the recording into fixed intervals, for instance 30 minutes. At each 30-minute mark, the current segment (file) closes and a new file opens seamlessly, without dropping frames. Closed segments can then be safely edited, streamed and so on.
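The chunking logic can be sketched in a few lines. This hypothetical example splits a recording, measured in frames, into back-to-back half-open ranges of 30 minutes each, so every frame lands in exactly one segment, and it treats every segment except the one still being written as closed. It assumes a constant 30 fps and does not describe any specific recorder's implementation.

```python
def segment_ranges(total_frames: int, fps: int = 30, segment_minutes: int = 30) -> list:
    """Split a recording into contiguous [start, end) frame ranges, one per segment file."""
    segment_len = segment_minutes * 60 * fps
    ranges = []
    start = 0
    while start < total_frames:
        end = min(start + segment_len, total_frames)
        ranges.append((start, end))
        start = end  # the next segment begins on the very next frame: no gap, no overlap
    return ranges


def closed_segments(ranges: list, still_recording: bool) -> list:
    """Segments whose files are closed and therefore safe to edit or stream."""
    return ranges[:-1] if still_recording else ranges


# 75 minutes recorded so far at 30 fps, cut into 30-minute segments.
parts = segment_ranges(75 * 60 * 30)
print(parts)  # [(0, 54000), (54000, 108000), (108000, 135000)]
print(closed_segments(parts, still_recording=True))  # [(0, 54000), (54000, 108000)]
```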

(IV) POST PRODUCTION

For the majority of productions, the end goal is to create an edited sequence. The production doesn’t end when the director yells “Cut!” Rather, the materials delivered to editing are transformed into a narrative through the editing, color and VFX process.

To make the handoff to post as smooth as possible, production planning needs to account for the post-production workflow. Here are some of the items to discuss with the post-production team.

i) EDITING SOFTWARE

Certain codecs and wrappers are better suited to certain editing software. For instance, if post uses Avid Media Composer, you will want to deliver files in Avid’s native wrapper, MXF OP-Atom. If this file type isn’t created during recording, it will need to be transcoded, adding unnecessary downtime.

ii) CODECS REQUIRED BY POST PRODUCTION

Most editing software can handle a wide range of file types and wrappers well, moving files around for editing and playback with minimal lag. There are exceptions, however. For example, XAVC is demanding to work with: decoding it for playback is resource-intensive and can cause an edit system to lag or stall. That makes it a poor choice for delivery to post-production.

iii) AT WHAT RESOLUTION WILL YOU DELIVER YOUR FINAL FILE?

If 4K is your target delivery resolution, record your primary files at 4K or greater while also recording a matching proxy. The proxy can be a lightweight HD H.264, DNxHD 36 or even ProRes Proxy file whose timecode matches the high-resolution file. During the final conform, the high-resolution files are matched back to the edited material because the primary and proxy files share the same timecode.
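The matchback step relies on that shared timecode. The following simplified sketch assumes each proxy and its primary file share a clip name and start timecode, and maps each edit event's source in/out timecode to a frame range in the primary file. The clip names, paths and the `conform` helper are hypothetical; real conforms are driven by EDL, AAF or XML exports from the NLE. Non-drop-frame 30 fps timecode is assumed.

```python
from dataclasses import dataclass


def tc_to_frames(tc: str, fps: int = 30) -> int:
    """Non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    h, m, s, f = (int(x) for x in tc.split(":"))
    return ((h * 60 + m) * 60 + s) * fps + f


@dataclass(frozen=True)
class PrimaryClip:
    clip_name: str  # shared by proxy and primary (assumed naming convention)
    path: str       # location of the high-resolution file
    start_tc: str   # recorded with the same timecode as its proxy
    frames: int     # clip length in frames


@dataclass(frozen=True)
class EditEvent:
    clip_name: str  # clip used in the proxy edit
    src_in: str     # source timecode in
    src_out: str    # source timecode out (exclusive)


def conform(events, primaries, fps: int = 30):
    """Map each proxy edit event onto a frame range in the matching primary file."""
    by_name = {clip.clip_name: clip for clip in primaries}
    conformed = []
    for ev in events:
        clip = by_name[ev.clip_name]  # KeyError: no primary was recorded for this proxy
        base = tc_to_frames(clip.start_tc, fps)
        first = tc_to_frames(ev.src_in, fps) - base
        last = tc_to_frames(ev.src_out, fps) - base
        if not 0 <= first < last <= clip.frames:
            raise ValueError(f"{ev.clip_name}: event falls outside the primary clip")
        conformed.append((clip.path, first, last))
    return conformed


primary = PrimaryClip("ISO1_SC03_TK02", "/media/uhd/ISO1_SC03_TK02.mxf", "01:00:00:00", 54000)
edit = [EditEvent("ISO1_SC03_TK02", "01:00:10:00", "01:00:20:00")]
print(conform(edit, [primary]))  # [('/media/uhd/ISO1_SC03_TK02.mxf', 300, 600)]
```

If the proxy had been recorded with different timecode from the primary, the computed frame ranges would point at the wrong material, which is why matching timecode is a prerequisite for this workflow.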

(V) HOW CAN I FUTURE-PROOF MY WORKFLOW?

Video production has been through several technology revolutions, with each successive wave of change arriving at shorter intervals. A workflow that was adopted 10 years ago is likely outdated, but a silo mentality can start to develop because stakeholders may not want to innovate on a process that works well.

i) SUPPORT NEW IP SIGNALS FOR I/O

Advances in computing power and in network and internet bandwidth, especially upload bandwidth, make it possible to compress digitized video and transmit it over private IP networks and the internet using standard IP protocols. The video’s data rate can also be adjusted to fit the available bandwidth.
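Adjusting to the available bandwidth often means choosing from a ladder of preset bitrates. The sketch below, using an illustrative ladder and an assumed 75% safety margin, picks the highest rung that fits the measured upload bandwidth. The rung names and bitrates are hypothetical, not recommendations.

```python
from typing import Optional

# Hypothetical bitrate ladder in kilobits per second, highest quality first.
LADDER = [("1080p", 6000), ("720p", 3000), ("540p", 2000), ("360p", 800)]


def pick_rung(available_kbps: float, headroom: float = 0.75,
              ladder=LADDER) -> Optional[tuple]:
    """Highest-bitrate rung that fits within a safety fraction of the measured bandwidth."""
    budget = available_kbps * headroom
    for name, kbps in ladder:  # ladder is ordered from highest to lowest bitrate
        if kbps <= budget:
            return name, kbps
    return None  # even the lowest rung does not fit; the stream would likely stall


print(pick_rung(10000))  # ('1080p', 6000)
print(pick_rung(5000))   # ('720p', 3000)
print(pick_rung(500))    # None
```

Leaving headroom below the measured bandwidth is a common precaution, since upload capacity fluctuates during a live stream.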

IP-based video lets each machine in an IP-connected environment act both as a stand-alone source and as its own switchboard of sources. Any live source can be cut into a broadcast on any machine connected to the IP video environment. This opens up new possibilities for collaboration and content creation using both traditional and non-traditional sources, such as live streams from user devices on location.

The same IP infrastructure can replace aging SDI facilities, with commodity hardware and software-based tools taking over traditional switching, routing and monitoring. Protocols such as SMPTE ST 2110 and NDI are optimized for different use cases: NDI is far simpler to implement, while SMPTE ST 2110 is a more sophisticated replacement for SDI that carries each essence (video, audio and ancillary data) as its own separately available stream.

ii) SUPPORT STREAMING FORMATS

As social media and remote-work applications grow more prevalent, both streaming content directly and recording incoming streaming feeds are becoming increasingly important.

One signal format gaining popularity is SRT (Secure Reliable Transport), an open-source transport protocol developed by Haivision. With SRT, a studio or facility can use its existing network infrastructure to send video point to point for recording, replacing traditionally expensive satellite transmission. Because it requires no expensive new infrastructure, SRT continues to gain traction in new facilities and upgrades.